Ethics in Machine Learning

Values, Morals & Ethics

Values

Deep-rooted beliefs that form the foundation guiding a person's decisions. Some examples:

- integrity: admits that they stole a cookie

- friendship: drops everything to help a friend in need

- health: takes time to work out in the morning

- success: works late nights to get high grades

Morals

Systems of beliefs that combine values, often with a social or religious context, and that guide what counts as good and bad behaviour.

Sometimes the values can conflict, leading to a moral dilemma, for example compromising your health to get higher grades.

Examples of Morals

- It is bad to steal cookies (based on a value of honesty).

- Helping a friend is a good thing to do (based on a value of friendship).

- It is bad to skip a workout (based on a value of a healthy lifestyle).

- Working late at night is a good thing to do (based on a value of success).

Ethics

Some ethicists consider morals and ethics indistinguishable. Others see ethics as being more about which behaviours are right and wrong. On that view, morals reflect intentions, while ethics is a code of behaviour.

Ethics is often an organisation's attempt to regulate behaviour with rules based on a shared moral code. You often encounter ethics in professional contexts as a code of conduct.

Examples of Ethics I

- Doctors swear the Hippocratic Oath binding them to "first do no harm" to their patient.

- PETA, "People for the Ethical Treatment of Animals", campaigns against the abuse of animals in any form.

- Employees of a company often sign a code of ethics, which includes keeping important matters confidential and not stealing from the workplace — both of which would be fireable offenses.

Examples of Ethics II

- A lawyer is ethically bound to defend their clients to the best of their ability, even if they are morally opposed to their clients' crimes. Breaking this ethical code could result in a mistrial or disbarment.

- A student who helps another student cheat on a test is breaking their school's ethics.

Moots

Hypothetical situations intended to provoke discussion.

The Trolley Problem

Self-driving cars & autonomous vehicles

- Should the morality of a car favour saving its driver and passengers over pedestrians and passengers of other cars?

- MIT's Moral Machine and article

- Should the morality of a car be decided by society or by the owner?

- Would you buy a car with a configurable morality knob?

- A Self-Driving Car Might Decide You Should Die

- The German government's Ethics Commission's complete report on automated and connected driving contains 20 ethical rules.

Data Ethics

This section draws on a chapter of the book Deep Learning for Coders with fastai and PyTorch by Jeremy Howard & Sylvain Gugger. That chapter was co-authored by Rachel Thomas.

What could possibly go wrong?

- Machine Learning is powerful. How can it go wrong?

- bugs

- model complexity

- unforeseen application

- Consider the consequences of our choices.

- right and wrong

- actions and consequences

- good and bad individual and societal outcomes

- Why does it matter?

Three case studies in Data Ethics

- Recourse processes: Arkansas’s buggy healthcare algorithms left patients stranded.

- Feedback loops: YouTube’s recommendation system helped unleash a conspiracy theory boom.

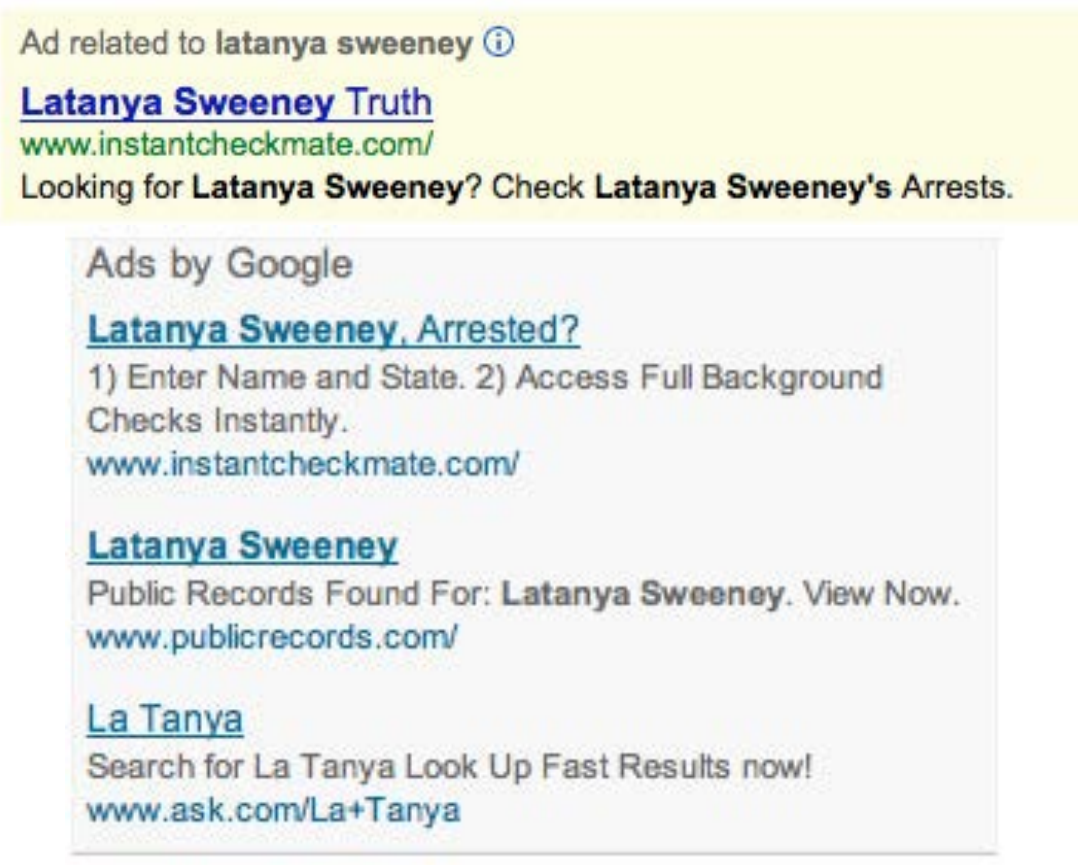

- Bias: When a traditionally African-American name is searched for on Google, it displays ads for criminal background checks.

Recourse processes

- Arkansas’s buggy healthcare algorithms: What happens when an algorithm cuts your health care

- The Dutch childcare benefits scandal (de kinderopvangtoeslagaffaire)

Feedback loops

- YouTube’s recommendation system helped unleash a conspiracy theory boom.

- YouTube Unleashed a Conspiracy Theory Boom. Can It Be Contained?

Racial Bias

- When a traditionally African-American name is searched for on Google, it displays ads for criminal background checks.

- Discrimination in Online Ad Delivery

- Latanya Sweeney & Kirsten Lindquist

Why Does This Matter?

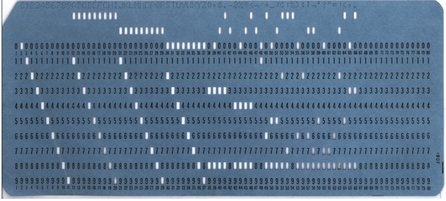

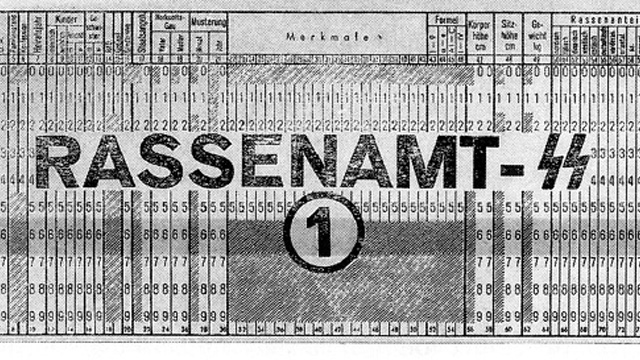

Punched cards for automation

Hollerith card used in Nazi concentration camp

Dehomag poster

IBM CEO Tom Watson Sr. meeting with Adolf Hitler

IBM and the Holocaust

IBM and the Holocaust by investigative journalist Edwin Black

"This book will be profoundly uncomfortable to read. It was profoundly uncomfortable to write."

Machine Learning and Product Design

Figure 1: Members of the U.S. Congress matched to mugshots by Amazon facial recognition software

Amazon facial recognition software

- People of colour were overrepresented among the false matches.

- Amazon did not train police departments in how to use their software

- Cross-disciplinary

Topics in Data Ethics

The need for recourse and accountability

“Bureaucracy has often been used to shift or evade responsibility…. Today’s algorithmic systems are extending bureaucracy.”

— NYU professor danah boyd

Extending bureaucracy

- Data frequently contains errors

- A police database of suspected gang members in California contained 42 babies under a year old, 28 of whom had "admitted being in a gang".

- In 2012 the U.S. Federal Trade Commission found that 26% of consumers had at least one mistake on their credit reports.

- GDPR right to rectification.

- Identity theft. Luton man left shocked as his house is ‘stolen’

Feedback loops

- YouTube's recommendation system.

- Human beings tend to be drawn to controversial content.

- It turns out that the kinds of people who are interested in conspiracy theories are also people who watch a lot of online videos!

A Service for Pedophiles?

- New York Times article from 2019 On YouTube’s Digital Playground, an Open Gate for Pedophiles

- Had YouTube intended to provide a service to paedophiles?

- Algorithm optimising to a metric.

The Feedback Loop

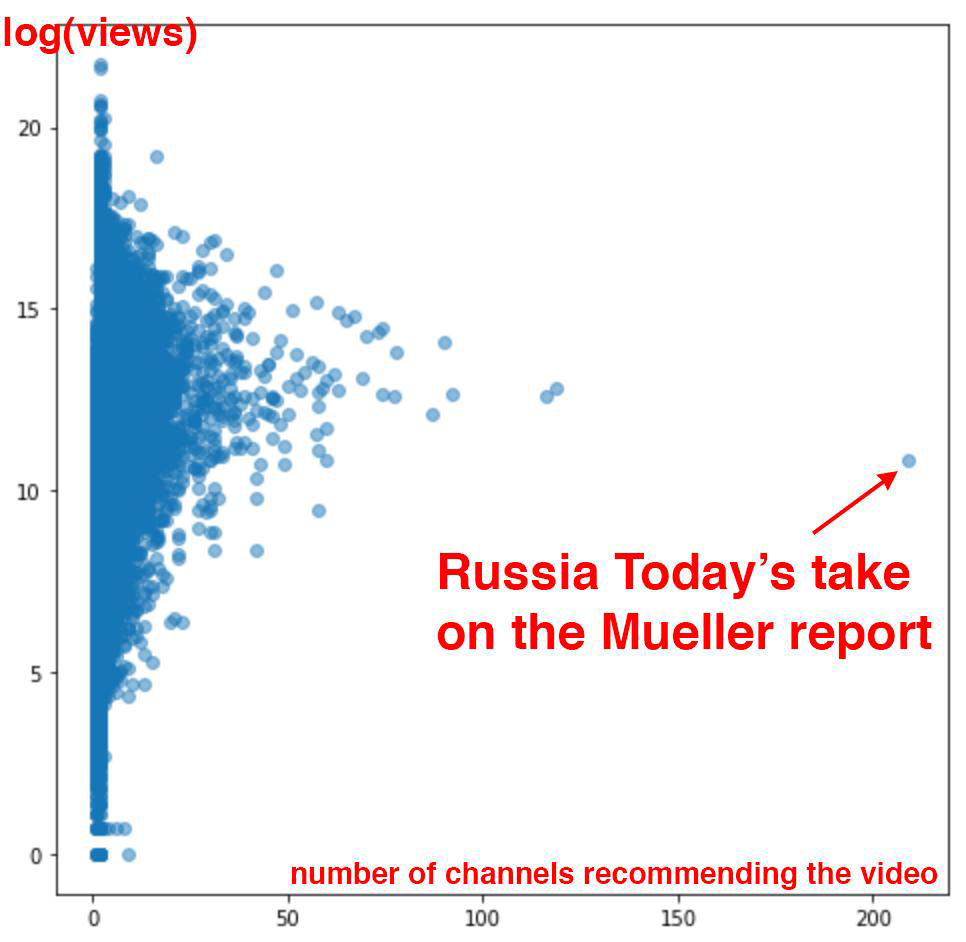

- Former YouTube engineer Guillaume Chaslot's AlgoTransparency website.

- The Social Dilemma Netflix documentary.

- Robert Mueller’s “Report on the Investigation Into Russian Interference in the 2016 Presidential Election.”

- Breaking the feedback loop

- Meetup noticed that men expressed more interest in tech meetups than women did, and as a result tech meetups were being recommended to women less often. Meetup deliberately broke the loop.
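The mechanism behind such a loop can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the interest rates, the two-group setup and the `simulate` function are hypothetical and are not a description of how Meetup's or YouTube's systems actually work. The naive recommender sets each group's next exposure in proportion to the clicks it just observed, so a small initial difference in interest is amplified every round. The "broken loop" variant keeps exposure independent of group.

```python
def simulate(rounds, break_loop=False):
    """Toy feedback loop: exposure follows past clicks unless break_loop is set."""
    # Hypothetical click rates when a tech meetup is shown (assumed values).
    interest = {"men": 0.55, "women": 0.45}
    # Share of tech-meetup recommendations each group receives; start equal.
    share = {"men": 0.5, "women": 0.5}
    history = [dict(share)]
    for _ in range(rounds):
        # Observed clicks depend on both interest and how often items were shown.
        clicks = {group: share[group] * interest[group] for group in share}
        total = sum(clicks.values())
        if not break_loop:
            # Naive recommender: next exposure is proportional to observed clicks.
            share = {group: clicks[group] / total for group in clicks}
        # With break_loop=True the exposure is left unchanged by the clicks.
        history.append(dict(share))
    return history


if __name__ == "__main__":
    naive = simulate(10)
    fixed = simulate(10, break_loop=True)
    print("women's share, naive loop: ", round(naive[-1]["women"], 3))
    print("women's share, broken loop:", round(fixed[-1]["women"], 3))
```

In the naive variant the women's share of recommendations shrinks every round even though the underlying interest never changes. The system is reacting to its own earlier output, not to new information about its users.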

Leveraging the Feedback Loop

Figure 2: Number of channels recommending coverage of the Mueller report

Bias

- Not statistical bias or machine learning bias but the social science notion of bias.

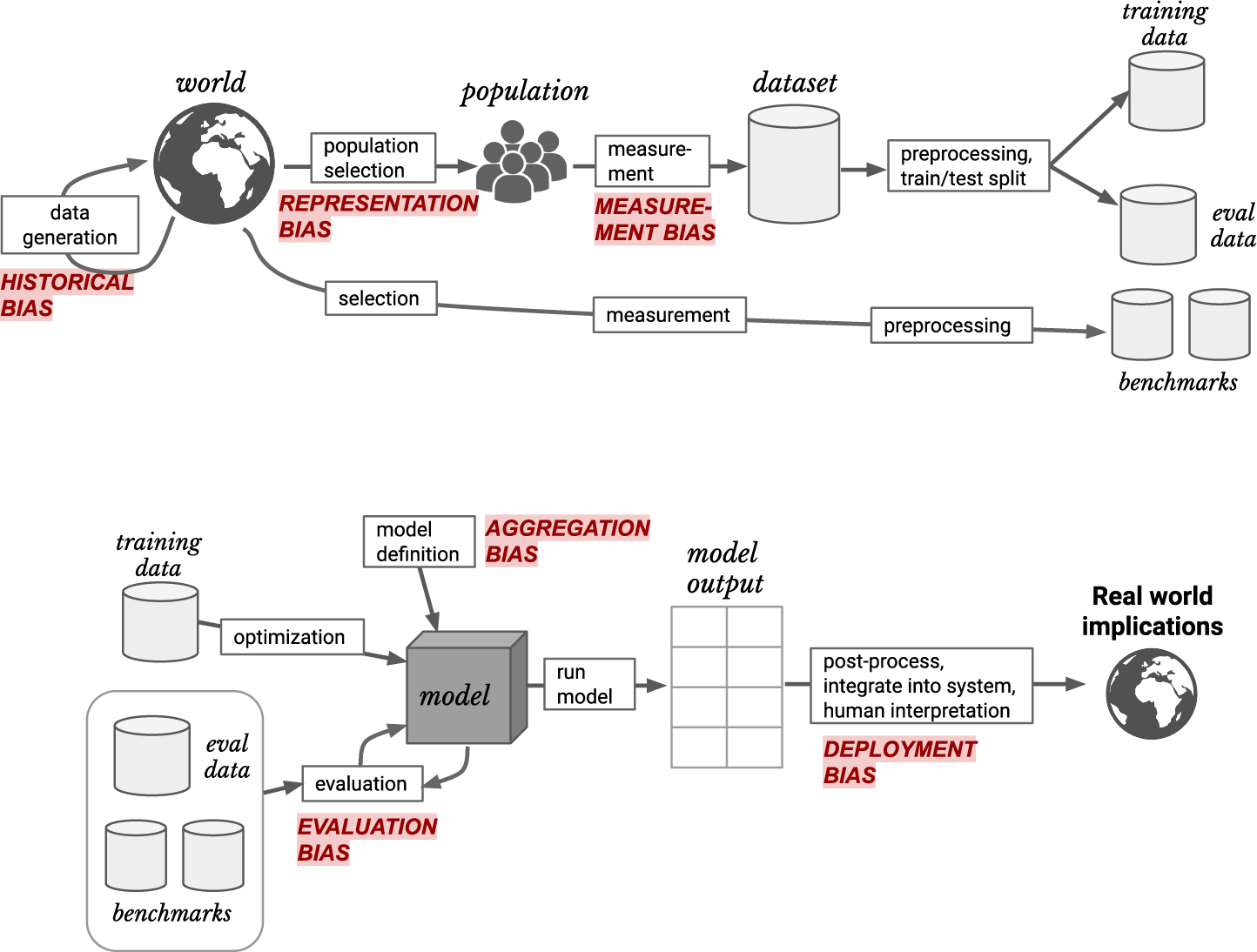

- A Framework for Understanding Unintended Consequences of Machine Learning by Suresh and Guttag

Figure 3: Sources of bias in Machine Learning

Historical bias

- Shown identical files, doctors were much less likely to recommend cardiac catheterization (a helpful procedure) to Black patients.

- When bargaining for a used car, Black people were offered initial prices $700 higher.

- Responding to apartment rental ads on Craigslist with a Black name elicited fewer responses than with a white name.

- An all-white jury was 16 percentage points more likely to convict a Black defendant than a white one, but when a jury had one Black member, it convicted both at the same rate.

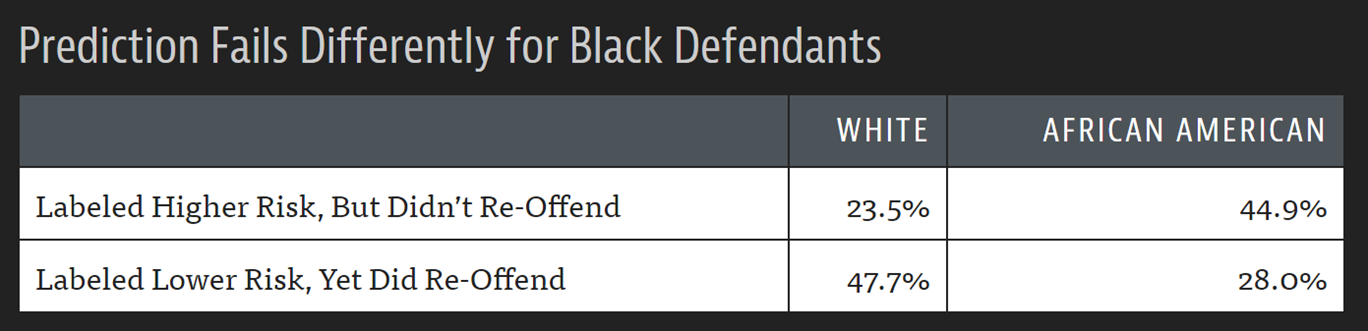

COMPAS

Figure 4: The COMPAS algorithm, widely used for sentencing and bail decisions in the US

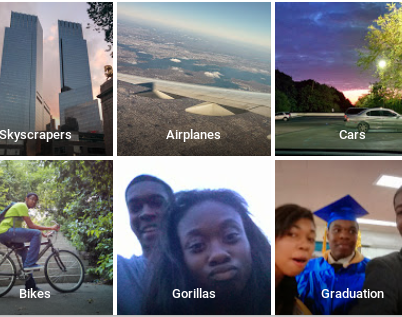

Google Photos autotagging

Figure 5: Google Photos autotagging

Google's first response to the problem

Figure 6: Google's first response to the problem

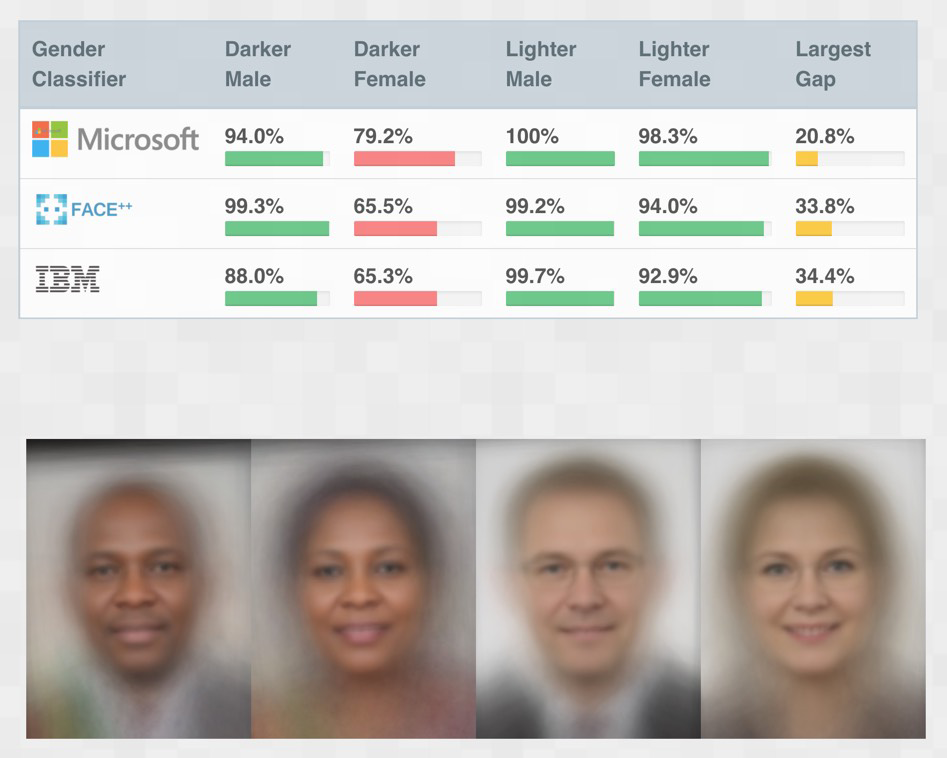

MIT study of facial recognition systems

Figure 7: MIT study of facial recognition systems

Overconfidence

“We have entered the age of automation overconfident yet underprepared. If we fail to make ethical and inclusive artificial intelligence, we risk losing gains made in civil rights and gender equity under the guise of machine neutrality.”

— Joy Buolamwini, MIT

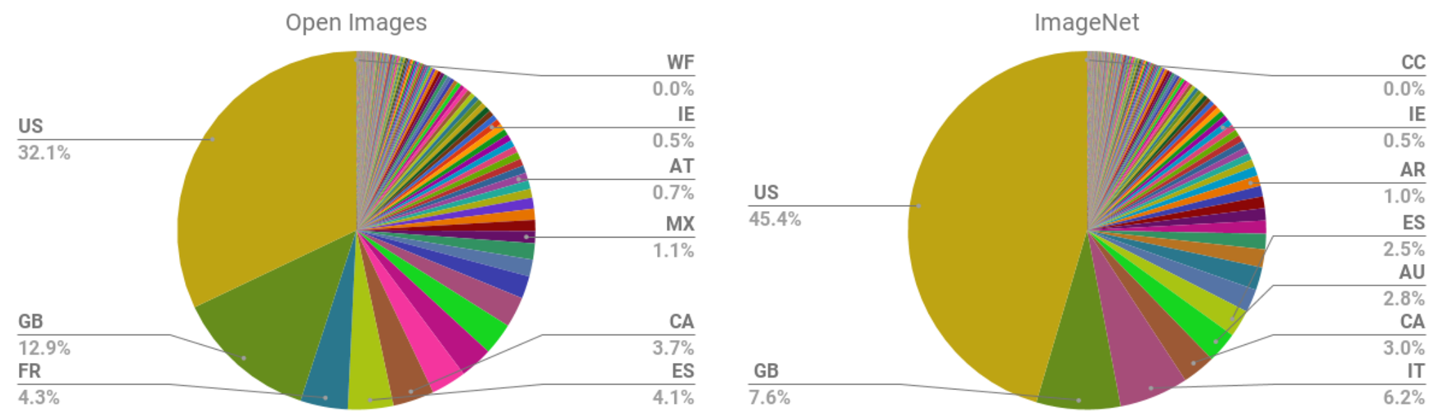

Data Provenance

Figure 8: Provenance of two of the largest online publicly available image data sets

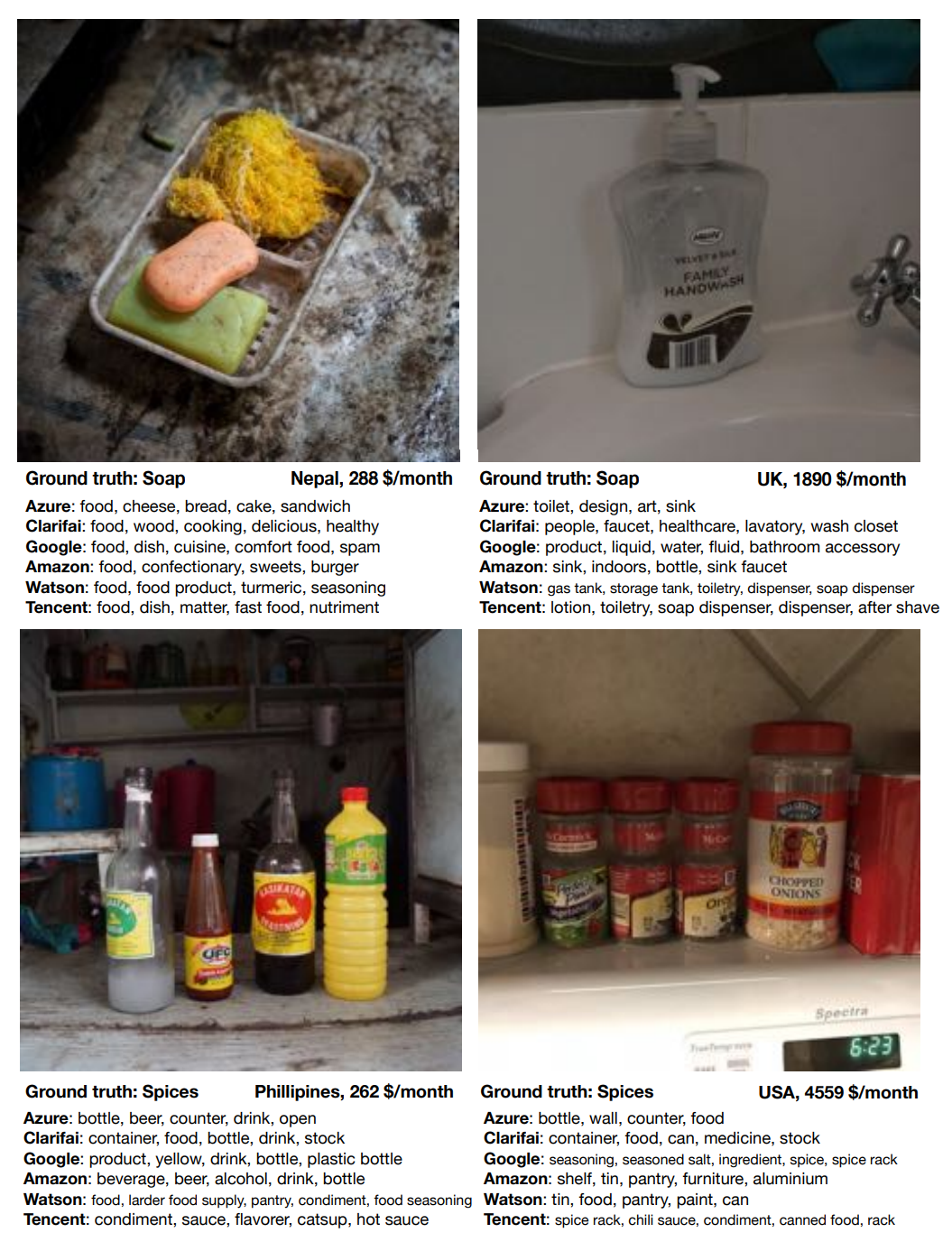

Localization

Figure 9: Object detection in more and less wealthy countries

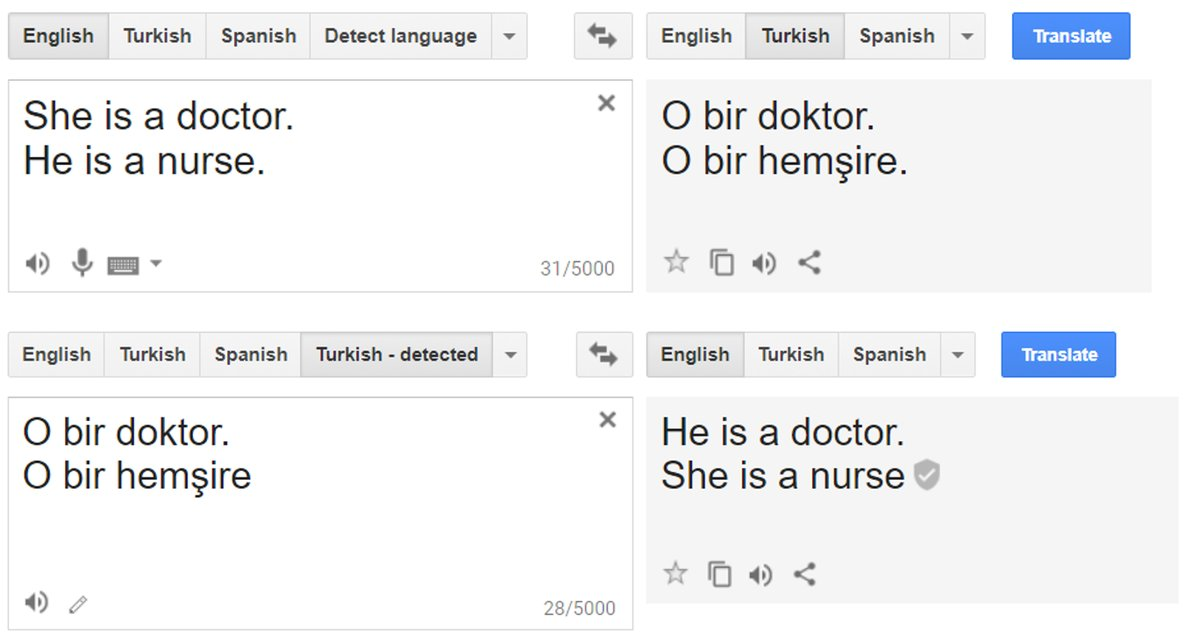

Genderless language

Figure 10: Translating to and from a genderless language

Measurement bias

- From Electronic Health Record (EHR) data, what factors are most predictive of stroke?

- Prior stroke

- Cardiovascular disease

- Accidental injury

- Benign breast lump

- Colonoscopy

- Sinusitis

Aggregation bias

- Clinical trials

- Diabetes and HbA1c levels

- Ethnicities and genders

Representation bias

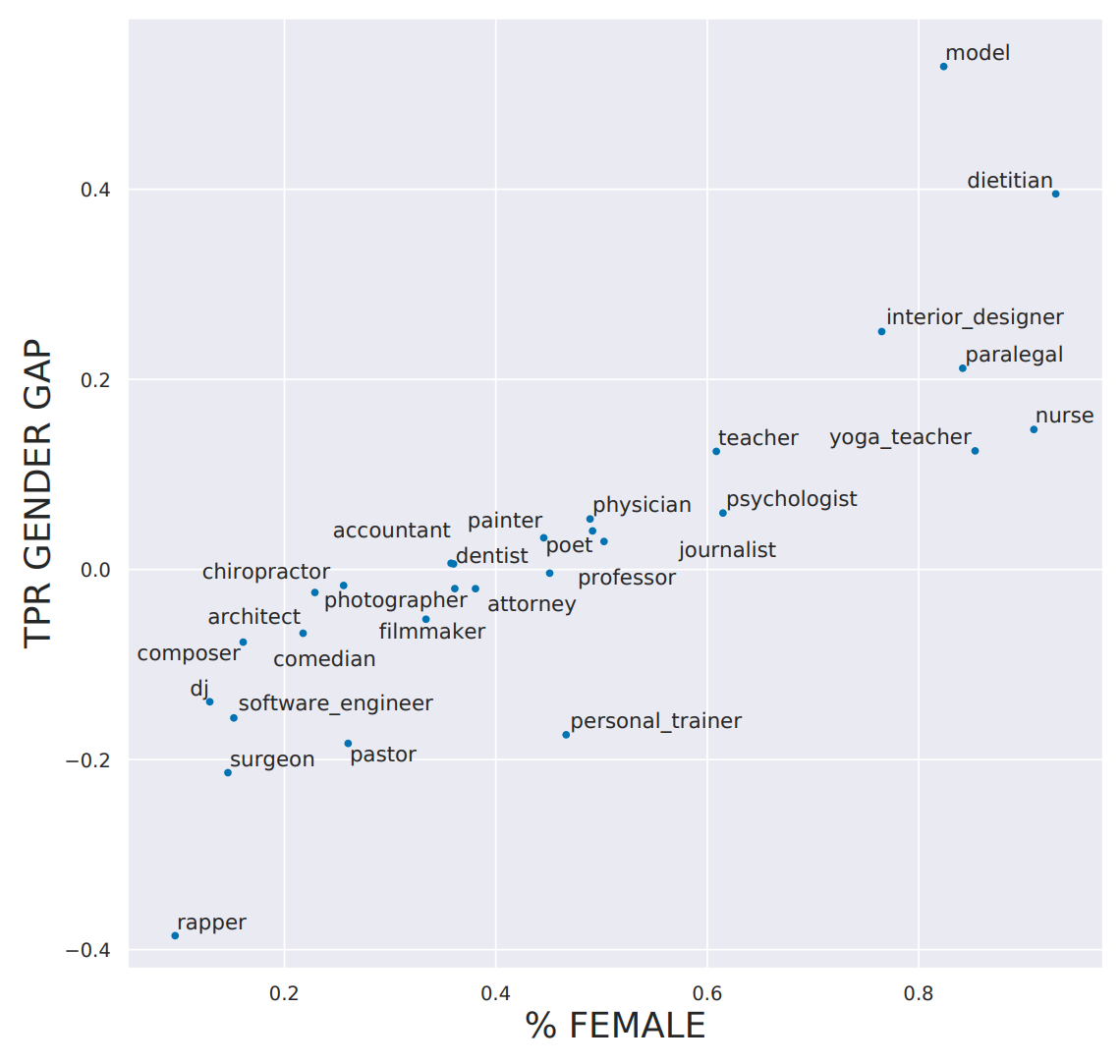

Figure 11: The gender gap in occupations

Addressing bias

- “Humans are biased, so does algorithmic bias even matter?”

- Machine learning is often implemented in practice not because it leads to better outcomes, but because it is cheaper and more efficient.

Weapons of Math Destruction

- Weapons of Math Destruction by Cathy O’Neil: the privileged are processed by people, whereas the poor are processed by algorithms.

- People are more likely to assume algorithms are objective or error-free (even if they’re given the option of a human override).

- Algorithms are more likely to be implemented with no appeals process in place.

- Algorithms are often used at scale.

- Algorithmic systems are cheap.

Disinformation

“Most campaigns are a carefully designed mixture of facts, half-truths, exaggerations, and deliberate lies.”

— The KGB and Soviet Disinformation, Ladislav Bittman

Heart of Texas

Figure 12: Heart of Texas islamization posting

Large Language Models

Explain Artificial Intelligence in the style of a caveman.

Artificial intelligence, like fire, big rock, and wheel, is powerful magic that make things smarter than they are. It is like lightning that lives inside our machines, making them think and do things like a human. It helps us hunt for food, find our way, and talk to others far away. It is like having a shaman inside our cave telling us what to do and how to stay safe. But just like fire and big rock, we must be careful with AI and use it wisely or it might turn against us.

Identifying and Addressing Ethical Issues

Analyze a project you are working on.

- Should we even be doing this?

- What bias is in the data?

- Can the code and data be audited?

- What are the error rates for different subgroups?

- What is the accuracy of a simple rule-based alternative?

- What processes are in place to handle appeals or mistakes?

- How diverse is the team that built it?
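The question about error rates for different subgroups can be checked with very little code. The following is a minimal sketch, assuming binary labels and a list of `(group, y_true, y_pred)` records. The function name `error_rates_by_group` and the tiny data set are hypothetical and synthetic, chosen only to show that two groups can share the same overall error rate while experiencing very different kinds of error.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute error rates per group from (group, y_true, y_pred) records.

    Labels are assumed to be binary: 1 = positive, 0 = negative.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "pos": 0, "neg": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["pos"] += 1
            if y_pred == 0:
                c["fn"] += 1  # a real positive that the model missed
        else:
            c["neg"] += 1
            if y_pred == 1:
                c["fp"] += 1  # a real negative that the model flagged
    rates = {}
    for group, c in counts.items():
        rates[group] = {
            # None marks a rate that is undefined because the group has
            # no negatives (or no positives) in the data.
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
            "error_rate": (c["fp"] + c["fn"]) / (c["pos"] + c["neg"]),
        }
    return rates


# Synthetic records for illustration only: (group, true label, predicted label).
records = [
    ("A", 1, 1), ("A", 1, 0), ("A", 0, 0), ("A", 0, 0),
    ("B", 0, 1), ("B", 0, 0), ("B", 1, 1), ("B", 1, 1),
]

if __name__ == "__main__":
    for group, r in sorted(error_rates_by_group(records).items()):
        print(group, r)
```

In this synthetic example both groups have the same overall error rate, but all of group A's errors are false negatives and all of group B's are false positives. A single aggregate accuracy figure would hide that difference, which is why the audit question asks for rates per subgroup.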

Implement processes at your company to find and address ethical risks.

Ethical lenses

- The rights approach. Which option best respects the rights of all who have a stake?

- The justice approach. Which option treats people equally or proportionately?

- The utilitarian approach. Which option will produce the most good and do the least harm?

- The common good approach. Which option best serves the community as a whole, not just some members?

Questions

- Who will be directly affected by this project? Who will be indirectly affected?

- Will the effects in aggregate likely create more good than harm, and what types of good and harm?

- Are we thinking about all relevant types of harm/benefit (psychological, political, environmental, moral, cognitive, emotional, institutional, cultural)?

- How might future generations be affected by this project?

Questions II

- Do the risks of harm from this project fall disproportionately on the least powerful in society? Will the benefits go disproportionately to the well-off?

- Have we adequately considered “dual-use” and unintended downstream effects?

The alternative lens to this is the deontological perspective, which focuses on basic concepts of right and wrong:

- What rights of others and duties to others must we respect?

- How might the dignity and autonomy of each stakeholder be impacted by this project?

- What considerations of trust and of justice are relevant to this design/project?

- Does this project involve any conflicting moral duties to others, or conflicting stakeholder rights? How can we prioritize these?

Increase diversity.

- Less than 12% of AI researchers are women.

- Why Diverse Teams Are Smarter

- According to the Harvard Business Review, 41% of women working in tech leave, compared to 17% of men. An analysis of over 200 books, whitepapers, and articles found that the reason they leave is that “they’re treated unfairly; underpaid, less likely to be fast-tracked than their male colleagues, and unable to advance.”

Types of programmers

- “The types of programmers that each company looks for often have little to do with what the company needs or does. Rather, they reflect company culture and the backgrounds of the founders.” – The number one finding from research by Triplebyte

Fairness, Accountability and Transparency

- Fairness and Machine Learning: Limitations and Opportunities by Solon Barocas, Moritz Hardt and Arvind Narayanan

The Data Revolution

“I strongly believe that in order to solve a problem, you have to diagnose it, and that we’re still in the diagnosis phase of this. If you think about the turn of the century and industrialization, we had, I don’t know, 30 years of child labor, unlimited work hours, terrible working conditions, and it took a lot of journalist muckraking and advocacy to diagnose the problem and have some understanding of what it was, and then the activism to get laws changed. I feel like we’re in a second industrialization of data information.”

— Julia Angwin, senior reporter at ProPublica

Reading

- Weapons of Math Destruction by Cathy O'Neil

- The Age of Surveillance Capitalism by Shoshana Zuboff

- An Ugly Truth: Inside Facebook's Battle for Domination by Sheera Frenkel and Cecilia Kang

- Privacy Is Hard and Seven Other Myths: Achieving Privacy through Careful Design by Jaap-Henk Hoepman

- Breaking the Social Media Prism: How to Make Our Platforms Less Polarizing by Chris Bail

- The Alignment Problem by Brian Christian (2020).

Reading II

- Je hebt wél iets te verbergen (You Do Have Something to Hide) by Maurits Martijn & Dimitri Tokmetzis (in Dutch)

- Het is oorlog maar niemand die het ziet by Huib Modderkolk, translated into English as There's a War Going On But No One Can See It

- AI Superpowers: China, Silicon Valley, and the New World Order by Kai-Fu Lee

- In The Plex: How Google Thinks, Works, and Shapes Our Lives by Steven Levy

- Facebook: The Inside Story by Steven Levy

Reading III

- Ethical Data and Information Management: Concepts, Tools and Methods by Katherine O'Keefe and Daragh O Brien.

- Automating Inequality by Virginia Eubanks

- Invisible Women: Data Bias in a World Designed for Men by Caroline Criado Pérez

- Data Feminism by Catherine D’Ignazio and Lauren F. Klein

- Race After Technology: Abolitionist Tools for the New Jim Code by Ruha Benjamin